It Takes A Village: The Case for Multi-Agentic AI

As AI agent technology matures, some patterns are emerging. Agents have proven themselves indisputably useful in the coding space, and they will likely need to conquer this space before they can conquer the world. Agents appear most useful not as “autonomous” ersatz people or anthropomorphized mock employees, but as contextually adaptable, automated workflow handlers. That is to say, the most effective agentic solutions don’t try to imitate a person; instead, they are built more like traditional programs operating over well-defined workflows, and they respect time-honored software design principles like the single responsibility principle, interface segregation, and modularity.

If we take these design principles seriously and treat agents as programs, we’re led to the conclusion that we shouldn’t stuff too many responsibilities into a single agent; we should modularize. Any complex workflow, therefore, might be better served by a sequence of agents, each with a single responsibility, with a communication interface between them. Hence my belief that the only right way to do agentic AI is multi-agentic AI.

Despite the logical conclusion that agentic AI should be multi-agentic, the multi-agent approach still has unsolved problems with coordination and scalability. (You could become very rich if you figured them out, by the way.) With all that in mind, let’s review what works and what doesn’t.

Why Lone-Wolfing It Is a Bad Idea

Unless you have a relatively simple workflow, it’s probably a bad idea to overburden a lone agent with too many responsibilities. We’re not at AGI yet, and without the proper guardrails these LLMs can get lost in labyrinths of context of their own creation. If you’re using a single agent to do a lot, ask yourself whether the process could be improved by dividing tasks among single-purpose specialist agents.

It Takes Two to Tango: The Brain-Hand Pattern

One smart pattern is to have a big, powerful, but expensive model like Claude Opus or o3 serve as the “brain” that does the planning, solution proposing, and analysis, and a smaller, lighter, cheaper model serve as the “hand” that does the extended instruction following and execution. This is far more cost-effective than having the big model power through everything, and it yields better results than having the smaller model bite off more than it can chew. This “the brain thinks, the hand acts” pattern is generally quite sound, and since it only requires a pair of models working in close proximity, its coordination complexity is usually manageable.

If you look at a well-designed agentic workflow like Claude Code, you’ll see that it’s making calls to Haiku under the hood, showing evidence of this pattern in action.
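To make the division of labor concrete, here is a minimal Python sketch of the brain-hand split, assuming each model is wrapped as a plain function from prompt text to response text. The `BrainHand` class, the prompts, and the `fake_brain`/`fake_hand` stand-ins (and the `parse_date` task they mention) are hypothetical illustrations, not Claude Code’s internals or any vendor’s API; in a real system the two callables would wrap clients for a large and a small model.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: a "model" is any function that maps a prompt string to a response string.
Model = Callable[[str], str]


@dataclass
class BrainHand:
    brain: Model  # large, expensive model: planning and analysis
    hand: Model   # small, cheap model: follows one instruction at a time

    def run(self, task: str) -> list[str]:
        # One expensive call: the brain turns the task into a plan, one step per line.
        plan = self.brain(f"Break this task into steps, one per line:\n{task}")
        steps = [line.strip() for line in plan.splitlines() if line.strip()]
        # Many cheap calls: the hand executes each step it is given, nothing more.
        return [self.hand(f"Do exactly this and report the result:\n{step}") for step in steps]


# Hypothetical stand-ins so the sketch runs without any API keys.
calls = {"brain": 0, "hand": 0}

def fake_brain(prompt: str) -> str:
    calls["brain"] += 1
    return "write tests\nimplement parse_date\nrun tests"

def fake_hand(prompt: str) -> str:
    calls["hand"] += 1
    return "done: " + prompt.splitlines()[-1]  # echo back the step it was given


results = BrainHand(brain=fake_brain, hand=fake_hand).run("add a parse_date helper")
print(results)  # ['done: write tests', 'done: implement parse_date', 'done: run tests']
print(calls)    # {'brain': 1, 'hand': 3}
```

The point of the design is the call ratio: one call to the expensive model for planning, then one call to the cheap model per step of execution.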

Ideally, your agents should be decoupled and interact only through well-defined API contracts. If you divide agents by task, you’ll need communication protocols for them to share information. This part is tricky, and I don’t think anyone has yet figured out how to do it optimally. The solution is probably not to have the agents hold sprawling conversations and debates with each other like people would, but to build predictable interfaces for exchanging structured outputs, again much like regular software modules. This coordination and communication problem compounds quickly as you add agents: if every agent can talk to every other, n agents have n(n-1)/2 possible channels, so ten agents already have 45. That is why, for now, it generally seems best to limit yourself to a few tight-knit agents rather than a vast fleet.
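One way to make “predictable interfaces for exchanging structured outputs” concrete is to have the receiving agent parse the sender’s raw text against a fixed contract and reject anything else, just as a typed module boundary would. The sketch below uses only the Python standard library; the `StepResult` contract and its fields are hypothetical examples, and a real system might use JSON Schema or a dedicated validation library instead.

```python
import json
from dataclasses import asdict, dataclass
from typing import ClassVar


@dataclass(frozen=True)
class StepResult:
    """Hypothetical contract: what a 'hand' agent must send back for each step."""
    step: str
    status: str  # must be one of ALLOWED_STATUS
    detail: str

    ALLOWED_STATUS: ClassVar[tuple[str, ...]] = ("ok", "failed")
    FIELDS: ClassVar[frozenset[str]] = frozenset({"step", "status", "detail"})

    @classmethod
    def from_json(cls, raw: str) -> "StepResult":
        # json.loads raises json.JSONDecodeError (a ValueError subclass) on free-form chatter.
        data = json.loads(raw)
        if not isinstance(data, dict) or set(data) != cls.FIELDS:
            raise ValueError(f"expected exactly the keys {sorted(cls.FIELDS)}")
        if not all(isinstance(data[key], str) for key in cls.FIELDS):
            raise ValueError("all fields must be strings")
        if data["status"] not in cls.ALLOWED_STATUS:
            raise ValueError(f"unknown status {data['status']!r}")
        return cls(**data)

    def to_json(self) -> str:
        # asdict() includes only the dataclass fields, not the ClassVar constants.
        return json.dumps(asdict(self))


good = StepResult.from_json('{"step": "run tests", "status": "ok", "detail": "12 passed"}')
print(good.status)  # ok

for bad in ['Sure! The tests passed.', '{"step": "run tests", "status": "maybe", "detail": ""}']:
    try:
        StepResult.from_json(bad)
    except ValueError as err:
        print("rejected:", err)
```

Rejecting malformed output at the boundary keeps one agent’s improvisation from leaking into the next agent’s context; the sender can simply be asked to try again.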

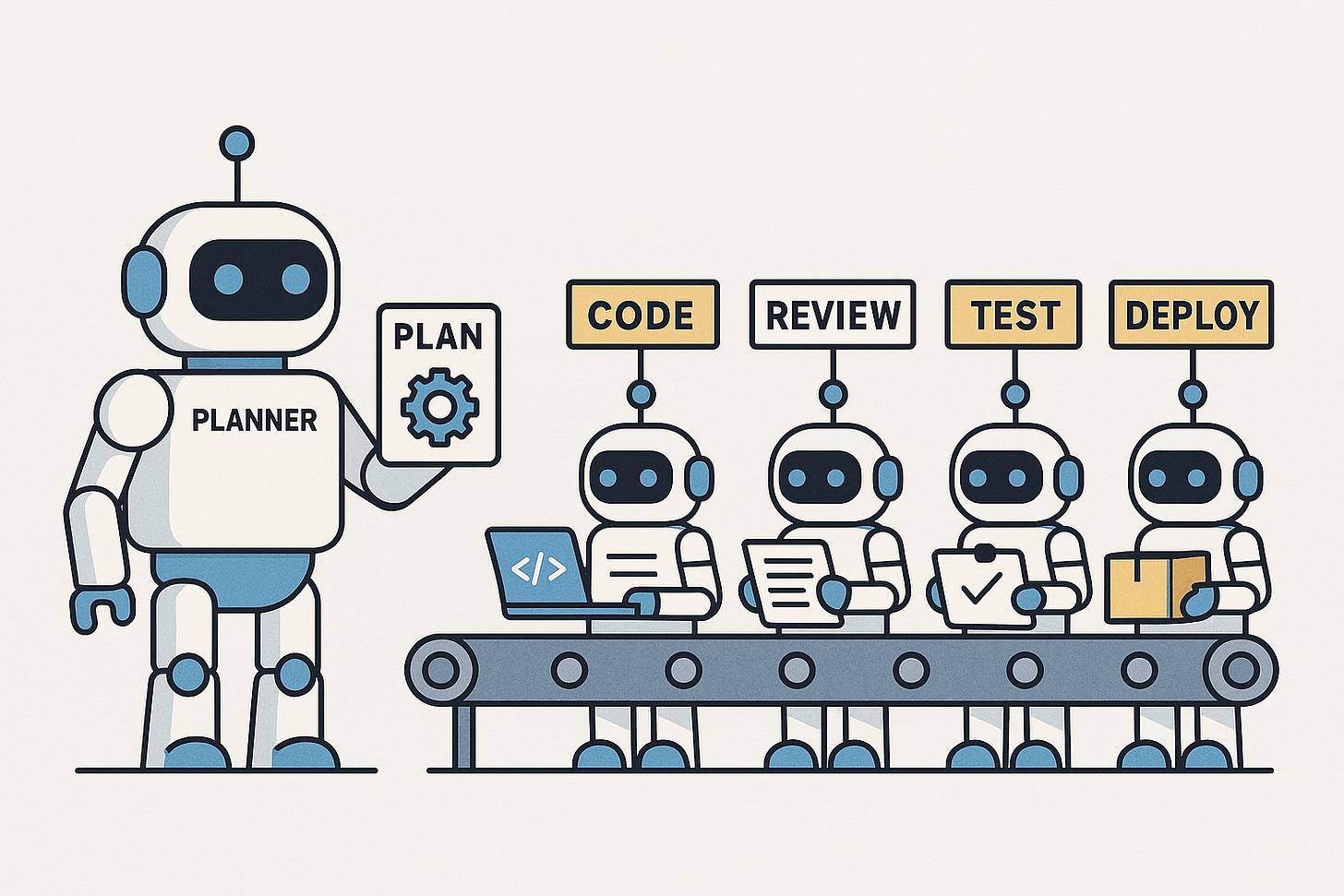

Assembly Line

Because complex heterarchical (many-to-many) communication protocols are difficult to coordinate, another good multi-agent pattern is the assembly line. A planner agent might set the agenda, and then each agent in the workflow does its job and passes the result forward, resulting in a progressively developing product. Each agent has its own tools and logic for doing what it does well. Perhaps the final agent inspects the finished product, and if it meets specifications, approves it. Otherwise it might get kicked back to the first step.
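A minimal sketch of the assembly line, assuming each agent is reduced to a function over a work-in-progress dictionary and the inspector returns a list of objections (an empty list means approved). The names `assembly_line`, `max_rounds`, and the toy agents are hypothetical. Two choices here are assumptions rather than part of the pattern as described: the inspector’s objections are handed back to the planner on a kick-back, and `max_rounds` caps the loop so a product that never passes inspection cannot cycle forever.

```python
from typing import Callable

Product = dict  # assumption: the work in progress is a plain dict passed down the line
Planner = Callable[[str, list[str]], Product]
Stage = Callable[[Product], Product]
Inspector = Callable[[Product], list[str]]


def assembly_line(spec: str, planner: Planner, stages: list[Stage],
                  inspector: Inspector, max_rounds: int = 3) -> Product:
    feedback: list[str] = []
    for round_no in range(1, max_rounds + 1):
        product = planner(spec, feedback)   # planner sets the agenda (using any objections)
        for stage in stages:                # each agent does one job and passes the result on
            product = stage(product)
        feedback = inspector(product)       # final agent checks the product against the spec
        if not feedback:
            return {**product, "rounds": round_no}
        # Not approved: kick back to the first step with the inspector's objections.
    raise RuntimeError(f"not approved after {max_rounds} rounds: {feedback}")


# Toy agents: the planner only asks for docs once the inspector has complained.
def plan(spec: str, feedback: list[str]) -> Product:
    return {"spec": spec, "want_docs": bool(feedback)}

def write_code(p: Product) -> Product:
    return {**p, "code": "def add(a, b):\n    return a + b"}

def write_docs(p: Product) -> Product:
    return {**p, "docs": "Add two numbers." if p["want_docs"] else ""}

def inspect_product(p: Product) -> list[str]:
    return [] if p.get("docs") else ["missing docs"]


result = assembly_line("add two numbers", plan, [write_code, write_docs], inspect_product)
print(result["rounds"])  # 2
```

Because every stage only sees the product handed to it, the communication graph stays a straight line instead of a many-to-many mesh.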

Synchronized Swimming: The Benefits of Parallelism

Another distinct advantage of a multi-agent setup is that it can be parallelized. Configuring and launching multiple threads for a set of agents means tasks can be accomplished that much faster. However, caution is advised if these agents must compete over shared resources or coordinate their work. As with all things collaborative, careful task definition and assignment are essential so that agents don’t interfere with each other. Setting a bunch of agents loose on a codebase can be a great way to destroy it if they aren’t channeled properly. So far, none of the solutions I’ve seen on the market have built a truly sophisticated synchronization mechanism for large, parallel agentic workflows, so there’s a major opportunity for whoever gets there first.
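The simplest guard against agents trampling each other is to partition the work before launch so that no two agents own the same file. The sketch below, built on Python’s standard `concurrent.futures.ThreadPoolExecutor`, refuses to start if assignments overlap and otherwise runs the agents concurrently. `Assignment`, `run_parallel`, and `fake_agent` are hypothetical names, and file-level ownership is only one possible policy: it sidesteps coordination rather than solving it, so it is no substitute for a real synchronization mechanism.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Assignment:
    agent: str
    files: frozenset[str]  # the only files this agent may modify


def check_disjoint(assignments: list[Assignment]) -> None:
    # Map each file to the agent that claimed it; a second claim is a conflict.
    owner: dict[str, str] = {}
    for a in assignments:
        for path in a.files:
            if path in owner:
                raise ValueError(f"{path} assigned to both {owner[path]} and {a.agent}")
            owner[path] = a.agent


def run_parallel(assignments: list[Assignment],
                 work: Callable[[Assignment], str],
                 max_workers: int = 4) -> dict[str, str]:
    check_disjoint(assignments)  # refuse to launch agents that could collide
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map runs work() concurrently but yields results in input order.
        results = list(pool.map(work, assignments))
    return {a.agent: r for a, r in zip(assignments, results)}


def fake_agent(a: Assignment) -> str:
    # Stand-in for launching a real agent on its own slice of the codebase.
    return f"{a.agent} edited {', '.join(sorted(a.files))}"


jobs = [
    Assignment("docs-agent", frozenset({"README.md"})),
    Assignment("api-agent", frozenset({"api.py", "schema.py"})),
]
print(run_parallel(jobs, fake_agent)["api-agent"])  # api-agent edited api.py, schema.py
```

Checking ownership up front means a conflict surfaces as an error before any agent runs, rather than as two agents silently overwriting each other’s edits.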

It’s widely assumed that these LLMs will continue to improve with time, and that brute power will override any need for clever architecture: eventually, one single model will be so powerful it can do it all (although it’s hard to see such a model ever working on parallel tasks simultaneously). But if we treat AI agents as software, such a monolithic design screams anti-pattern. The challenge currently is that, to my knowledge, no one has figured out how to solve the coordination and task-delegation problems scalably. There may come a day when we have hundreds or even thousands of these things operating together in synchronized swarms to accomplish superhuman tasks, but for now the full promise of multi-agentic AI is beyond reach.